The Vatican is working to ensure that one of the many threats to human life and dignity, the widening use of lethal autonomous weapons systems worldwide, is not reduced to a natural progression of technology that people learn to accept and live with.

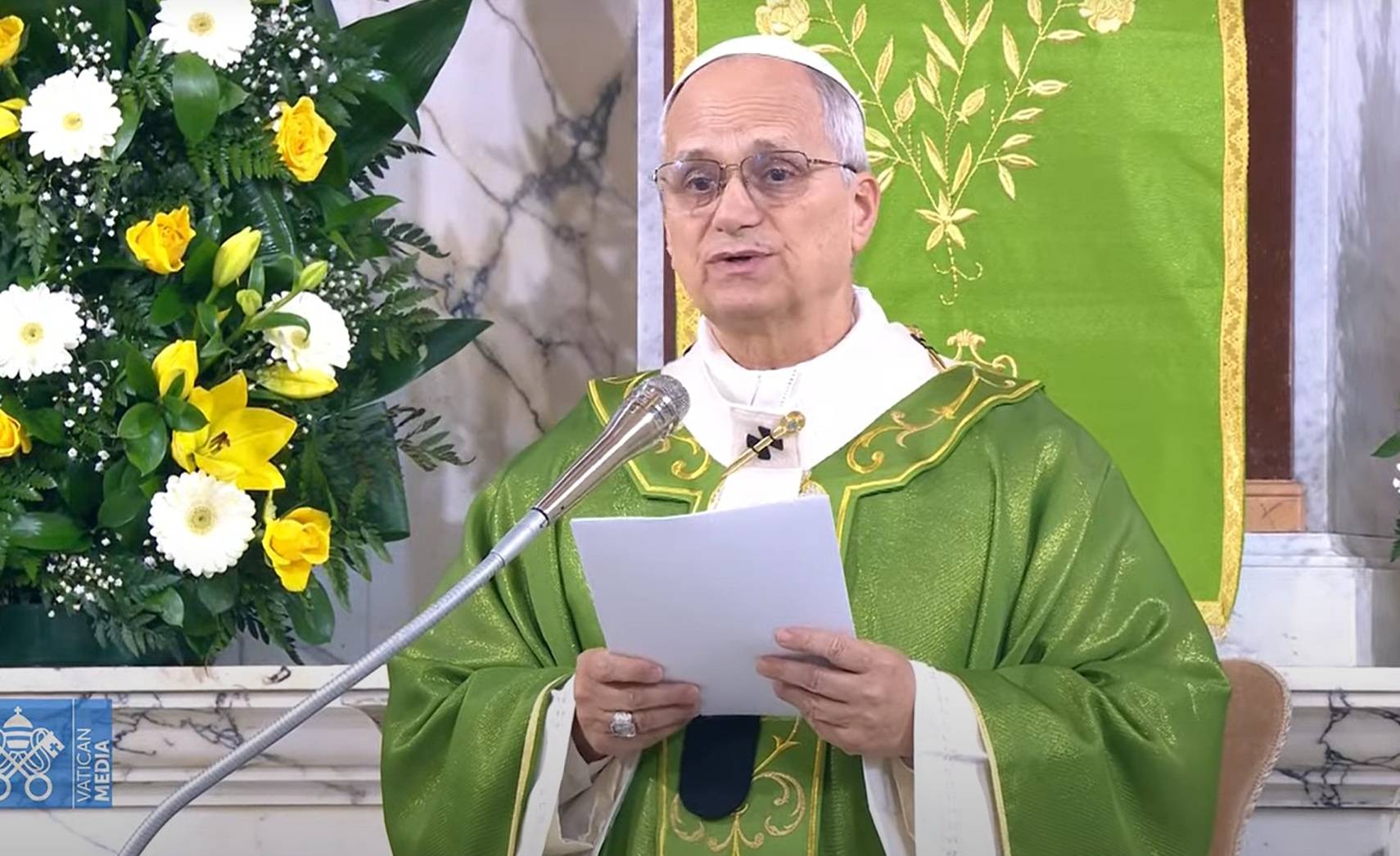

Vatican officials have joined Pope Francis in repeatedly expressing trepidation over such weapons, known as LAWS, saying their use poses a serious threat to innocent civilians.

The most recent caution against so-called “killer robots” came from the Vatican Permanent Observer Mission to the U.N. agencies in Geneva in early August during a meeting of the 2021 Group of Governmental Experts on Lethal Autonomous Weapons Systems of the Convention on Certain Conventional Weapons.

In the first of three daily statements to the group, the Vatican said Aug. 3 that a potential challenge was “the use of swarms of ‘kamikaze’ mini drones” and other advanced weaponry that utilizes artificial intelligence in its targeting and attack modes. LAWS, the Vatican said, “raise potential serious implications for peace and stability.”

The statements were the most recent from Vatican diplomats at the U.N. in Geneva. Pope Francis also raised the issue of autonomous weapons in his address to the 75th session of the U.N. General Assembly last September. In a world where multilateralism is eroding, he said, LAWS can “irreversibly alter the nature of warfare, detaching it further from human agency.”

Then in November, the pope focused his monthly prayer intention on artificial intelligence, or AI, and invited people to pray that any progress will always “serve humankind,” respect human dignity and safeguard creation.

It’s the rapid development of artificial intelligence in drones, missiles and other weapons that depend less on human control to determine who and what to attack that is driving a movement to rein in LAWS.

Disarmament advocates, including the Brussels-based Pax Christi International, contend that ethical and moral questions surrounding such systems have received scant attention in the race to develop fully autonomous weapons.

Because autonomous weapons can erroneously target innocent civilians and military noncombatants, the advocates are calling for lethal autonomous weapons to be added to the purview of the 40-year-old Convention on Certain Conventional Weapons. The sixth review conference on the convention is set for Dec. 13-17 in Geneva.

The convention already includes protocols on land mines, blinding laser weapons, and incendiary devices.

Leading the way on LAWS is the Campaign to Stop Killer Robots, an international coalition of 180 nongovernmental organizations from 68 countries. Pax Christi International is a participant.

“Many organizations and associations and government leaders would agree on the same thing, that life and death decisions cannot be outsourced to a machine. The Holy See has said it as well as anybody,” said Jonathan Frerichs, Pax Christi International’s U.N. representative for disarmament in Geneva.

He told Catholic News Service it is unethical to believe “that we could pretend our future can be served by life and death decisions that can be turned over to an algorithm.”

The Catholic peace organization’s partner in the Netherlands, PAX, has devoted significant resources to limiting LAWS development.

Daan Kayser, project leader on autonomous weapons at PAX, called on peacemakers to be vigilant to prevent humanity from crossing a “red line” on any weapon that makes it easier to kill people. Mathematical algorithms in facial recognition software, he explained, can misinterpret the data being collected, especially when it comes to cloaked figures of people of color.

“We feel these sorts of decisions shouldn’t be delegated to machines,” Kayser told CNS. Weapons, he said, must remain under “meaningful human control.”

For the record, the U.S. bishops’ Committee on International Justice and Peace in 2015 called for international limits on targeted killings by drones in urging that norms guiding drone usage be developed. The stance was expressed in a letter to Susan Rice, national security adviser in the Obama administration. The committee has not specifically addressed LAWS.

The Campaign to Stop Killer Robots reports that at least 30 countries, 4,500 artificial intelligence experts and the European Parliament support an international agreement to ban LAWS.

However, countries leading the way in the development of such weapons have opposed limits, arguing that they do not want to be left at a disadvantage. They include the United States, China, Israel, Russia, South Korea and the United Kingdom.

So do arms makers, said Maryann Cusimano Love, associate professor of international politics at The Catholic University of America in Washington.

“The arms makers and AI makers want to sell this stuff. They’re very pro-weapons sales, and then ethics, unfortunately, can be behind the curve. The time when these considerations should be brought in is in the development phase,” Cusimano Love said.

Robert Latiff, a retired U.S. Air Force major general, agreed that ethical concerns must be among the foremost considerations as autonomous weapons are developed, but he disagreed that an international agreement was needed.

“The only good of a treaty is if it could be verified. It would be impossible almost to verify a treaty governing the use of AI in a system,” said Latiff, who is Catholic and teaches ethics of emerging technologies at the University of Notre Dame in Indiana.

He told CNS that the existing international laws governing warfare are sufficient to address the ethical concerns that surround the use of any weapons system. He cited Article 36 of the Geneva Conventions as the vehicle to judge the morality of introducing any weapon system.

The provision requires that a country developing a new weapon, means or method of warfare must review whether it would be prohibited under the conventions or other international law.

“What I do think might be useful for the big guys (nations developing LAWS) if they all agreed on nonproliferation. Then we might be able to get somewhere,” Latiff said.

“I don’t think we’re going to ban them. It’s like banning warfare. You’re not going to ban warfare,” he said, suggesting that countries with LAWS agree to limit their use to well-defined military battlefields.

“I’m enough of a realist to know that wars are going to go on,” Latiff said. “These things are going to continue to be developed and the best we can hope for is to control them in some meaningful way.”

The Catholic disarmament advocates, however, are pushing for a stronger moral stance to be included in the development of artificial intelligence. Cusimano Love said the moral questions being raised by the Vatican must be addressed in order to protect vulnerable people, largely women and children, from a mistaken attack.

“The Holy See has said we must look at broader ways of building peace,” she said. “How do you do that if you’re already using a weapons system that can expand violence, expand war?”

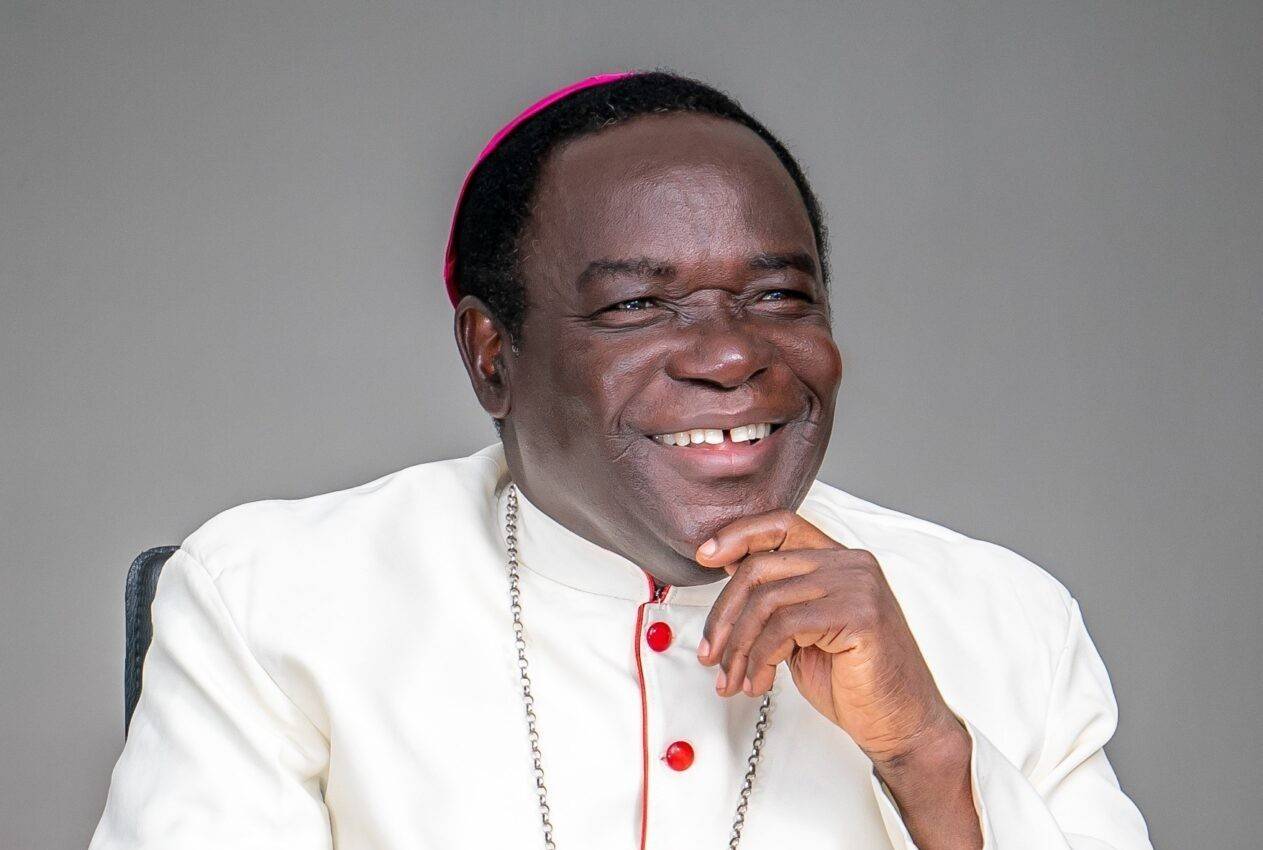

Another Vatican office, the Pontifical Academy for Life, convened a group of technology leaders in February 2020 to discuss artificial intelligence in the broader sense. The meeting led to the “Rome Call for AI Ethics,” which acknowledged the benefits of artificial intelligence but called for the development of algorithms guided by an “algor-ethical” vision from the start.

The document covers three areas, ethics, education and rights, and is rooted in six principles covering transparency, inclusion, responsibility, impartiality, reliability, and security and privacy.

Signing the document were Archbishop Vincenzo Paglia, president of the Pontifical Academy for Life; Brad Smith, president of Microsoft; John Kelly III, executive vice president of IBM; and representatives of the U.N. Food and Agriculture Organization and the Italian government’s Ministry of Innovation.

Pax Christi’s Frerichs said action to limit more sophisticated autonomous weapons is gaining momentum. He and others compared the work being done by proponents, the Vatican and key governments including Brazil to that which led to the Treaty on the Prohibition of Nuclear Weapons, which went into force in January, even without the world’s nuclear powers signing on.

“It took 70 years to get a legally binding instrument. The big powers are not joining it, but we can save the landscape,” Frerichs said.

“The goal of all this talk is to come with a law … to make the world a safe place, where there aren’t killer robots going around.”