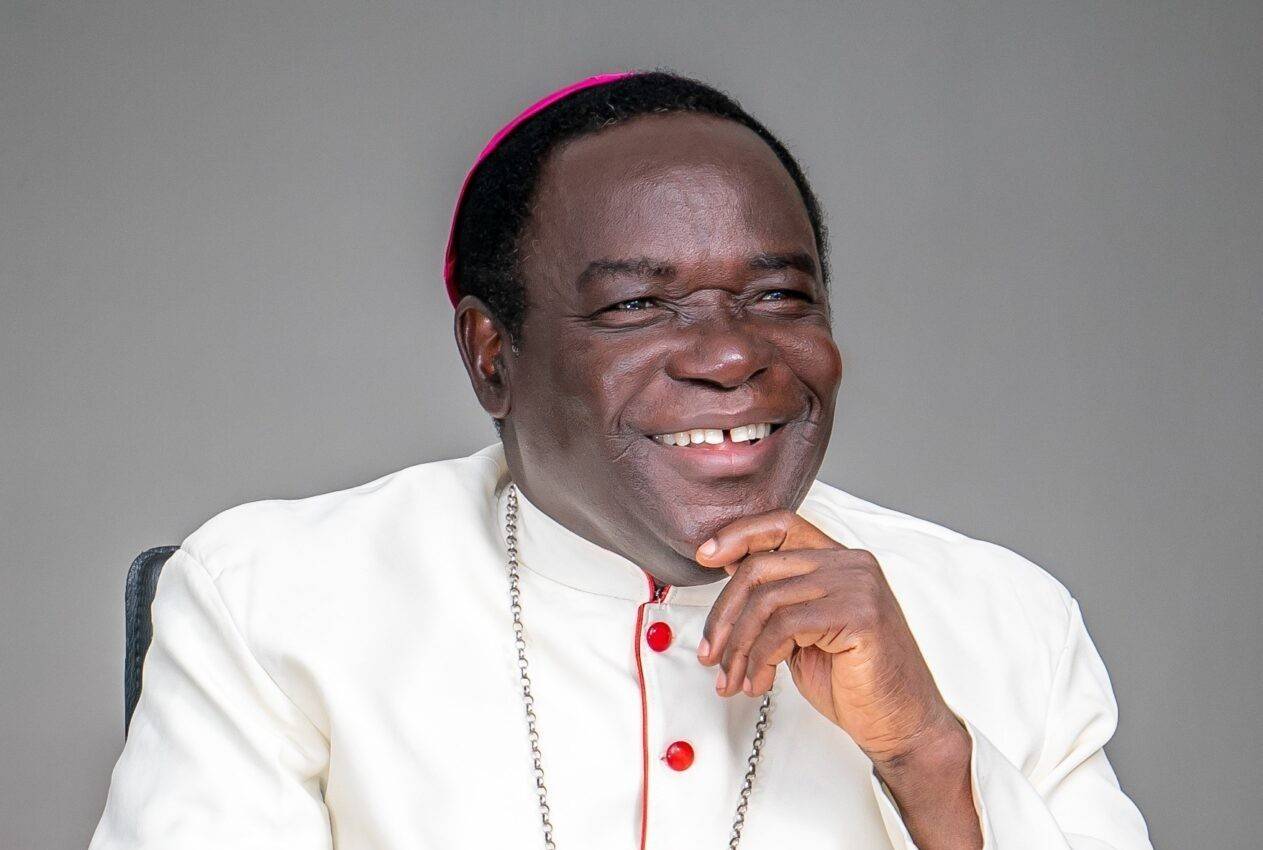

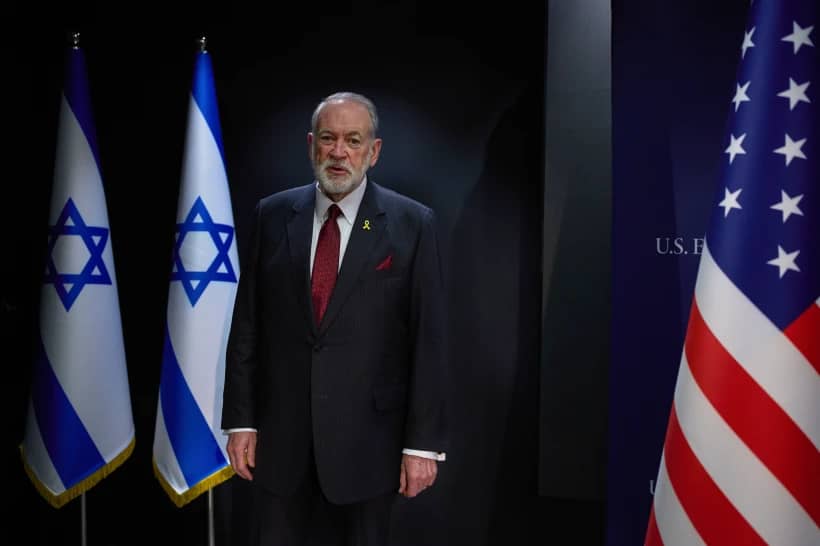

[Editor’s Note: Brian Patrick Green is the director of technology ethics at the Markkula Center for Applied Ethics. His work focuses on the ethics of technology, including such topics as artificial intelligence (AI) and ethics, the ethics of space exploration and use, the ethics of technological manipulation of humans, the ethics of mitigating and adapting to risky emerging technologies, and various aspects of the impact of technology and engineering on human life and society, including the relationship between technology and religion, particularly the Catholic Church. He spoke to Charles Camosy.]

Camosy: Can you tell us how you became interested in, and indeed an expert on, AI ethics?

Green: My undergraduate degree was in genetics from the University of California, Davis, and I worked in molecular biology and biotech there, but ultimately I discovered that lab work was not for me. I had dreamed of being a scientist since I was a child, so this was very confusing, and I didn’t know what to do with myself. I then made what turned out to be one of the best decisions of my life: I joined the Jesuit Volunteers International. Someone later pointed out to me that I had unconsciously followed a piece of good advice: “If you don’t know what to do, help people.”

JVI sent me to the Marshall Islands to be a high school teacher for two years. The Marshall Islands have experienced, and are still experiencing, the effects of two particularly devastating technology-related disasters: US nuclear testing and sea-level rise induced by climate change. Roughly ten percent of the Marshall Islands remains seriously contaminated by radioactivity, and sea-level rise is slowly sending the entire country under the waves.

I was constantly aware of the injustice while I was there, and I still think about it frequently. But a deeper understanding of the causes and meanings of these events sank in slowly, and I only really became aware of it during my doctoral studies at the Graduate Theological Union in Berkeley. Human beings were taking the natural world – as discovered through good science and re-purposed in technology – and turning it into weapons, whether intentionally (as in nuclear weapons) or unintentionally (as in sea-level rise due to greenhouse gas emissions). C.S. Lewis puts it very succinctly in The Abolition of Man: “what we call Man’s power over Nature turns out to be a power exercised by some men over other men with Nature as its instrument.”

These experiences and insights make me think that technology is the number one philosophical and theological question of our time. What humans have done by our own nature – making and using tools – has now put at risk the natural world, our civilization, and even, through biotechnology directed back on ourselves, our own nature itself.

So, I work on AI ethics because it has become a desperately important instantiation of the problem of how to properly use technology. Because I think this is one of the most important issues in the world, and because I am blessed to have a job at Santa Clara University that gives me the freedom and mandate to work on AI ethics, this is what I do.

What’s the latest with AI? Where is it heading?

For decades, AI seemed like a slow-moving academic sub-field stuck in a “winter.” But in the last few years it has exploded thanks to three converging and mutually amplifying socio-technical trends: 1) a massive growth in data, 2) growing access to “compute” (computational power that made it possible to quickly run previously very slow algorithms), and 3) a growing number of sophisticated algorithms and of talented individuals who can write those algorithms.

Individually, these trends might not have caused much interest, but combined, they have caused AI to grow at a phenomenal pace. It is also worth noting that each of these trends tends to centralize power on its own, and combined, they centralize power in an extreme way. For example, the form of AI known as machine learning requires very large data sets, and only a few organizations in the world are capable of assembling them: Governments and big tech corporations such as Alibaba, Amazon, Apple, Baidu, Facebook, Google, and Tencent.

Compute is similar: Massive computational power requires using megawatts of electricity on thousands of expensive chips. And algorithms, and particularly the human talent that can write those algorithms (the few best programmers are like elite athletes or movie stars in terms of how in-demand they are), are again in very limited supply and are therefore concentrated in only a few organizations.

As for where AI is heading, these trends are likely to continue, and this, in turn, will likely spark a backlash against technological centralization and misuse of power. We are already experiencing some of this “techlash” today. Either tech corporations will need to improve their behavior, or governments around the world will step in to regulate them, or both.

In my own work with corporations, I think there is a growing realization among many tech workers that technology ethics is becoming a key issue, but not all corporations have yet recognized the gravity of the situation. A few key leaders have, and they give me hope. But some tech companies – as well as governments – lack ethical leadership, and this is a serious problem that needs to be addressed soon.

In a recent blog post, you suggested that a primary driver here is “greed.” Could you say more about this?

One of the reasons that capitalism is such a dynamic and effective economic system is that it harnesses a natural human propensity towards selfishness – the vice of greed – and from that creates beneficial effects such as cheaper products, innovation, and economic growth. AI, in turn, permits massive gains in efficiency that were previously unattainable, for example, by targeting ads at those most likely to be persuaded by them, or by figuring out new ways to use electricity efficiently. These gains in efficiency can be quite good, helping people learn about products they might need and saving energy. However, those goods are often mere side effects of the deeper drive to make money, either by selling more of a product or by reducing costs.

If the greedy impulse is subservient to social benefit, then society should benefit from AI. However, many organizations do not operate this way; they seek to make money regardless of whether their social impact is positive, for example, by using AI to power addictive apps, which can then lead to filter bubbles, political polarization, neglect of human relationships, economic loss, increasingly negative emotions, and a deteriorating social fabric.

If corporations cannot do the right thing on their own, then they should be forced to act ethically through economic or political pressure. I do hold out hope that self-regulation is possible, but I think some corporations still don’t “get it,” and those corporations are quite capable of ruining the prospect of self-regulation for everyone else. And time is running short. Greed needs to be de-prioritized, and valuing the common good needs to gain a new place of prominence, or we are in for worse techno-social “glitches” than we are already experiencing.

Obviously, these developments present a number of critical ethical issues. Which ones do you see as particularly important to highlight? Could you expand on one or two of them that, in your view, are particularly urgent?

I have a “top ten” list of ethical issues in AI from two years ago, which I expanded to 12 issues in an academic article. Currently, in my graduate engineering AI Ethics course, I cover 16 issues: Safety, explainability, good use, bad use, environmental effects, bias and fairness, unemployment, wealth inequality, automating ethics, moral deskilling, robot consciousness and rights, AGI and superintelligence, dependency, addiction, psycho-social effects, and spiritual effects.

Given this swarm of issues, it is hard to single out just one. But if I had to choose the most urgent, the one needing a remedy right now, it would be the degrading integrity of the world’s information system (relating to the “bad use,” “bias and fairness,” and “psycho-social effects” categories above). Social media and the entire world media ecosystem need to be cleaned up as soon as possible.

Violence kills the body, but lies kill the mind. Misinformation and disinformation are flourishing on the internet and destroying the ability of humans to reason in a way that aligns with reality, reversing centuries of progress that came along with science and the pursuit of truth. This in turn is destroying our ability to cooperate and seek the common good together. It is stabbing at core aspects of our humanity: Our ability to think and cooperate. If we fail to correct this, human civilization could just plain fall apart.

You recently participated in a major Vatican conference on AI. What does the Church — and particularly Pope Francis — add to the discussion about these matters?

First, I think we should recognize how remarkable and vitally important it is that Pope Francis has taken an interest in technology at all. For centuries the Catholic Church was at the forefront of technological innovation (not only building cathedrals and other grand works, but pioneering metallurgy, chemistry, timekeeping, agricultural techniques, food preservation, the scientific method, and other core technologies for human development), yet it has never really developed a deep theology of technology.

Of Aristotle’s three realms of human intellectual activity, theoretical reasoning and practical reasoning have always had the Church’s attention, but reasoning directed towards the production of goods – in other words, technology – has been nearly ignored. Pope Francis has begun to correct this error, and for that the world can be grateful, I think. Rational production is a defining factor of modern life, and if we do not consider it more deeply, it will consume us, rather than us consuming it.

Additionally, the world thirsts for moral authority. The reputation of the Catholic Church, and particularly its hierarchy, remains badly damaged in this regard by scandals. However, despite this rightful reputational damage, the world is in a power vacuum right now when it comes to moral authority, so the Church is one of the few institutions capable of speaking with any moral weight at all.

And the Church’s history of deep thinking on ethics is desperately needed. Ideas that might seem simple to an ethicist can seem revolutionary to the unacquainted. Because the Church is one of the few global institutions that has ever taken ethics seriously – even if it has been consistently unable to live up to its own standards – it is also one of the few global institutions capable of providing the moral guidance necessary for making the world a better place. We can see this in the way that Catholic moral ideas have migrated into secular ethics over time: ideas like using treaties to restrict weapons of war, using double-effect reasoning in medical ethics, and considering just war theory in international relations.

If the Church were capable of it, it should set thousands of theologians and philosophers loose on the questions of technology ethics, and AI ethics in particular. Unfortunately, the Church lacks the resources to do that. But what it does have is the ability to point and say “Everybody, look at this! Is this right? Can we make this better?” And by doing that, hopefully the Church will motivate people to make things better.

Whether the impact will be sufficient remains to be seen. Mere moral authority cannot fix the world. What is needed are concrete actions at large scale, and that will require the coordination of millions of people, billions of dollars, and years of hard work.

AI can be used, like all intelligence, for both good and evil. We need to act now to secure its use for good.

Crux is dedicated to smart, wired and independent reporting on the Vatican and worldwide Catholic Church. That kind of reporting doesn’t come cheap, and we need your support. You can help Crux by giving a small amount monthly, or with a one-time gift. Please remember, Crux is a for-profit organization, so contributions are not tax-deductible.